DeepMind this morning reannounced their partnership with the Royal Free Hospital. Updates are at the bottom – details are in the 9:50 and 10:10 updates.

There’s apparently a new legal agreement to copy exactly the same data that caused so much controversy over the summer. We have not yet seen the new legal agreement, so can’t comment on what it permits or disallows.

Responding to the press release, Phil Booth, coordinator of medConfidential, said:

“Our concern is that Google gets data on every patient who has attended the hospital in the last 5 years and they’re getting a monthly report of data on every patient who was in the hospital, but may now have left, never to return.

“What your doctor needs to be able to see is the up-to-date medical history of the patient currently in front of them.

“The DeepMind gap, because the patient history is up to a month old, makes the entire process unreliable and makes the fog of unhelpful data potentially even worse.”

As DeepMind publish the legal agreements and PIA, we will read them and update comments here.

8:50am update: The DeepMind legal agreement was expected to be published at midnight. As far as we can tell, it wasn’t. Updated below.

TechCrunch have published a news article, and helpfully included the DeepMind talking points in a list. The two that are of interest (emphasis added):

- An intention to develop what they describe as “an unprecedented new infrastructure that will enable ongoing audit by the Royal Free, allowing administrators to easily and continually verify exactly when, where, by whom and for what purpose patient information is accessed.” This is being built by Ben Laurie, co-founder of the OpenSSL project.

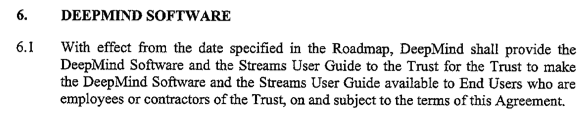

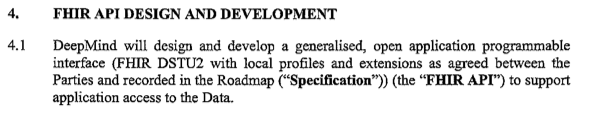

- A commitment that the infrastructure that powers Streams is being built on “state-of-the-art open and interoperable standards,” which they specify will enable the Royal Free to have other developers build new services that integrate more easily with their systems. “This will dramatically reduce the barrier to entry for developers who want to build for the NHS, opening up a wave of innovation — including the potential for the first artificial intelligence-enabled tools, whether developed by DeepMind or others,” they add.

Public statements about Streams (an iPhone app for doctors) don’t seem to explain what that is. What is it?

9:30 update: The DeepMind website has now been updated. We’re reading.

The contracts are no longer part of the FAQ; they’re now linked from the last paragraph of text. (mirrored here)

9:40 update: medConfidential is greatly helped in its work by donations from people like you.

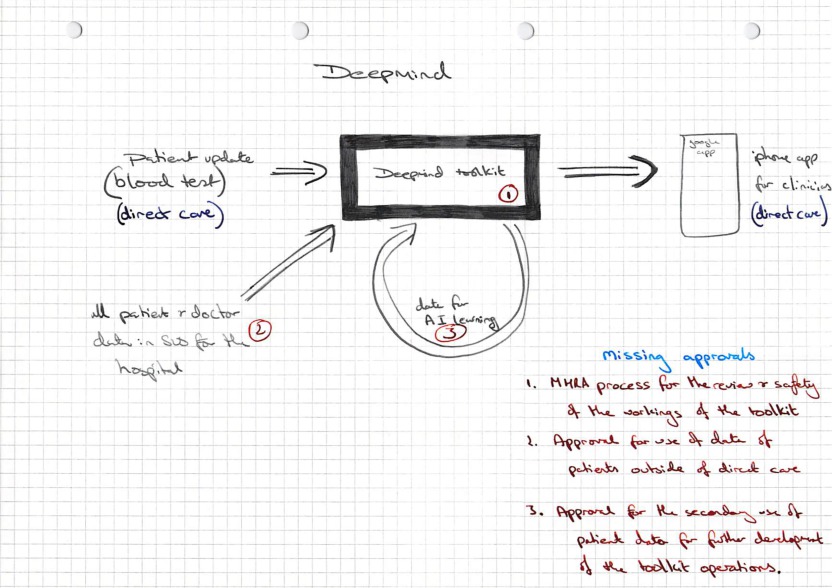

9:50 update: Interesting what is covered by what…

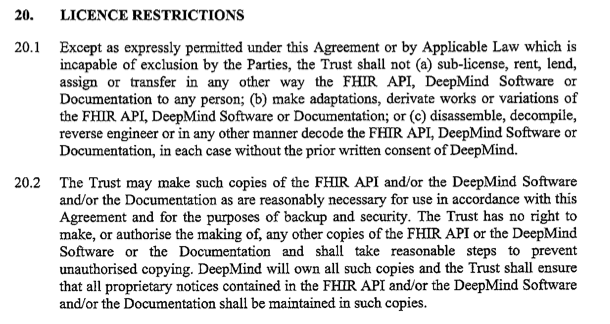

10:10 update: What data does the DeepMind FHIR API cover? What is the governance of that API? Is it contractually, legally, and operationally independent of the Streams app?

(It’s clearly none of those things, as the screenshots above show.)

DeepMind have made great play of their agreement being safe, but consent is determined in a Google meeting room, and the arrangements for the “FHIR API” are secretive and far from transparent.

There is likely to be only one more update today, around 1pm, unless Google make an announcement that undermines their contractual agreements.

1pm update: The original information sharing agreement was missing Schedule 1, and has been updated.

3:30 update: DeepMind have given some additional press briefings to Wired (emphasis added):

“Suleyman said the company was holding itself to ‘an unprecedented level of oversight’.” The government of Google’s home nation is conducting a similar experiment…

“Approval wasn’t actually needed previously because we were really only in testing and development, we didn’t actually ship a product,” which is what they said last time, and the MHRA told them otherwise.

Apparently “negative headlines surrounding his company’s data-sharing deal with the NHS are being ‘driven by a group with a particular view to pedal’.” The headlines are being driven by the massive PR push they have done since 2:30pm on Monday, when they put out a press release which talked only about the app, and mentioned data as an aside only in the last sentence of the first note to editors. – Beware of the leopard.

As to our view, medConfidential is an independent non-partisan organisation campaigning for confidentiality and consent in health and social care, which seeks to ensure that every flow of data into, across and out of the NHS and care system is consensual, safe and transparent. Does Google Inc disagree with that goal?